For this post, I’m going to write about infrastructure and its value (both positive and negative) in the lifecycle of developing software. My goal is to write this in a way that applies to virtually any project, large or small, across a diverse set of technologies, but ultimately I’ll be speaking from my most recent experiences, which have been with reasonably large projects (hundreds of developers).

Since the term can easily mean different things to different people, I’ll start by defining it. Wikipedia is always a good place to start, so here’s its entry for the word (in the generic sense, not specific to software):

> Infrastructure is the basic physical and organizational structures needed for the operation of a society or enterprise,[1] or the services and facilities necessary for an economy to function.[2] The term typically refers to the technical structures that support a society, such as roads, water supply, sewers, electrical grids, telecommunications, and so forth. Viewed functionally, infrastructure facilitates the production of goods and services; for example, roads enable the transport of raw materials to a factory, and also for the distribution of finished products to markets and basic social services such as schools and hospitals.[3] In military parlance, the term refers to the buildings and permanent installations necessary for the support, redeployment, and operation of military forces.[4]

If we convert that to “software speak”, we can file at least the following under infrastructure (note: there may be more; I’m just going for the obvious ones here):

- Build hardware and software – all the tools + scripts, and the hardware they run on, that are required to take your code and turn it into a deployable package (e.g. a binary for a phone app, or a collection of JavaScript and PHP files for a web app).

- Deployment hardware and software – all the tools + scripts, and the hardware they run on, that are required to take your deployable package and, well, deploy it onto representative hardware. Note that I’m being careful not to assume we’re only talking about web sites and web services here; deployments can also go to phones or other non-server hardware.

- Test hardware and software – all the tools + scripts, and the machines they run on, that are required to test your deployed packages.

- Reporting hardware and software – all of the above ultimately need a place to record results, both for diagnostics and for detecting trends over time; this is your reporting infrastructure.

- Source control and work item tracking hardware and software – projects of any reasonable complexity need a way to track source code as well as bugs and/or tasks (many smaller teams track the latter in more Agile ways).

That looks like a good list to start with, so now on to the “value” part of the post. Why do software development projects need all this stuff? Why do we spend time and money on infrastructure when, in the abstract, not all of it adds direct value to the end product? The value of infrastructure in software is just like the value of infrastructure in the broader sense (using the definition from Wikipedia above): good infrastructure allows us to do our jobs, and poor infrastructure can often prevent us from doing them. I will readily admit that there’s a point of diminishing returns with infrastructure, but in my experience (which spans working at both large and small companies) we too frequently forget how valuable good infrastructure is. For example, imagine a team of, say, 6 developers, all working on a feature that involves:

- Developing a database to store data (aka data tier)

- Developing a service to retrieve the data over HTTP (aka services tier)

- Developing a web page to render the data in a browser (aka client tier)

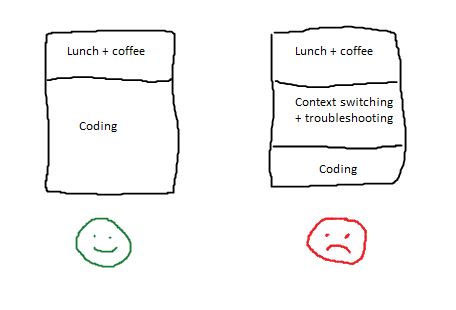

It sounds pretty simple, and it’s the kind of system that many people work on every day. A feature complex enough to require 6 developers will need coordination and lots of testing. That coordination needs to happen both inside a tier (so two or more devs can work on the data tier simultaneously) and across tiers (so two or more devs can work on client/services interactions). There’s more than one way to solve this problem, but typically you’d want some infrastructure (as defined above) to support both collaboration within a tier and integration across tiers. If everything (builds, testing, deployment) is working well, the devs can happily check in their code, wait a short time for it to build, wait another short time for it to pass testing, and then have it automatically deployed to a server so others can test and/or integrate with their changes.

Since there are several stages in the infrastructure pipeline, and we already know that context switches are expensive, it behooves the business to have that pipeline run both quickly and reliably. If it’s slow, developers will go and do something else while they wait, and the longer the pipeline takes, the more it costs them to get back into the original task, and then back into whatever they picked up in the meantime. If it’s unreliable, developers will have to spend time troubleshooting and fixing the infrastructure when they could be writing code that directly adds value to the business/feature.
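To put rough numbers on that trade-off, here is a minimal Python sketch that models the check-in pipeline as a list of stages, each with a duration, an infrastructure-failure rate, and a troubleshooting time. The `Stage` class, the two helper functions, and every number below are hypothetical, chosen for illustration rather than measured on a real project. The model also deliberately ignores reruns and the context-switch penalty described above, so if anything it underestimates the cost.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One step of a check-in pipeline (hypothetical model, not a real tool's API)."""
    name: str
    minutes: float       # time a developer waits for this stage when it succeeds
    failure_rate: float  # probability the stage fails for infrastructure reasons
    fix_minutes: float   # developer time spent troubleshooting one such failure


def cost_per_checkin(stages: list[Stage]) -> float:
    """Expected developer minutes lost per check-in: waiting plus troubleshooting.

    Simplifying assumption: a failed stage costs its troubleshooting time once;
    reruns and context-switch overhead are not modeled.
    """
    waiting = sum(s.minutes for s in stages)
    troubleshooting = sum(s.failure_rate * s.fix_minutes for s in stages)
    return waiting + troubleshooting


def team_minutes_per_day(stages: list[Stage], developers: int, checkins_per_dev: int) -> float:
    """Scale the per-check-in cost to a whole team for one working day."""
    return developers * checkins_per_dev * cost_per_checkin(stages)


# Illustrative numbers only: build -> test -> deploy, as in the scenario above.
pipeline = [
    Stage("build", minutes=10, failure_rate=0.05, fix_minutes=30),
    Stage("test", minutes=20, failure_rate=0.10, fix_minutes=45),
    Stage("deploy", minutes=5, failure_rate=0.02, fix_minutes=60),
]

if __name__ == "__main__":
    print(f"per check-in: {cost_per_checkin(pipeline):.1f} min")
    print(f"team of 6, 2 check-ins each: {team_minutes_per_day(pipeline, 6, 2):.1f} min/day")
```

With these made-up inputs, each check-in costs about 42 minutes, which for a team of 6 checking in twice a day adds up to more than eight hours of developer time daily. Shrinking the test stage’s duration or failure rate and re-running the script is a quick way to see which investment pays off more; the point is not the specific numbers but that both speed and reliability show up directly as lost developer time.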

Since most developers don’t work for free, what would you rather have your team doing: writing code for your feature, or waiting on slow infrastructure and troubleshooting unreliable infrastructure?